My father recently told me that despite having used Linux for a few years, he's still a bit unclear on the terminology and how all the pieces fit together, so I'm going to explain it all here! I'll also explain a bit about how the pieces work along the way.

I will assume that the reader knows a few things about hardware. If your memory needs refreshing, here are a few facts:

- Memory or RAM is volatile (the contents disappear when the computer is turned off), speedy storage used to hold data that the computer is working on now or may need on very short notice.

- The processor or CPU is the part that actually operates on data. It can perform various mathematical operations, load data from RAM into its registers (extremely fast storage for data that is actively being operated on), and store data into RAM, among other things.

- Disk (either traditional spinning magnetic platters or modern flash-based storage) is non-volatile: it is used to store data that needs to stick around when the power is turned off. Disks (even obscenely fast, enterprise-grade flash storage) are quite slow compared to RAM, typically by orders of magnitude.

The Linux Kernel

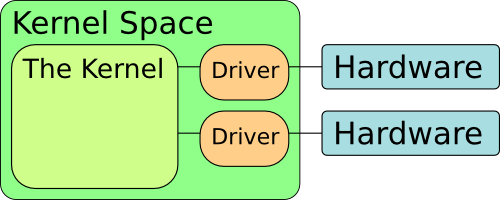

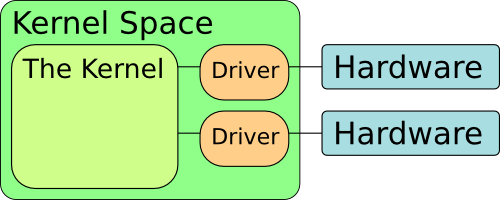

First, there's the Linux kernel. It's where the name "Linux" comes from. An operating system kernel is the very heart of the system. Its purpose is to provide an abstraction over the hardware and to provide some low-level management services. Let's go over these one at a time.

Hardware Abstraction

Suppose you want to write a program that makes sounds. In the bad old days of DOS, you would need to write custom code for every single model of sound card you wanted to support. Supporting every sound card on the market would be quite a lot of work, and if you left one out, anybody with that card would be left high and dry. Games from that era would actually ask, during installation, which sound card the computer had. If yours wasn't on the list, too bad.

Modern operating systems like Linux and Windows provide abstractions for things like sound. The operating system kernel defines an API (application programming interface: a defined set of routines, with their names, inputs, and expected behavior) for manipulating sound hardware, and the companies that make sound cards write pieces of code called drivers that implement the functionality behind that API. In other words, the operating system defines what a sound driver "looks" like, and the sound hardware vendors write code that "looks" that way. Thus, your program only needs to use the operating system's sound API, and it will work with any card on the market. It will even work with cards you've never heard of and cards that haven't been invented yet.
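To make the idea of "the OS defines what a driver looks like" concrete, here is a minimal Python sketch. It is only a model: real Linux drivers are C code running in the kernel, and the names below (`SoundDriver`, `play`, `AcmeSoundDriver`, `BetaAudioDriver`, `beep`) are hypothetical, not part of any real sound API. The abstract base class plays the role of the OS-defined interface, each subclass plays a vendor's driver, and `beep` is an application that only knows about the interface.

```python
from abc import ABC, abstractmethod


class SoundDriver(ABC):
    """Hypothetical OS-defined interface: what every sound driver must 'look' like."""

    @abstractmethod
    def play(self, frequency_hz: int, duration_ms: int) -> str:
        """Play a tone and describe what the hardware did."""


class AcmeSoundDriver(SoundDriver):
    # Hypothetical driver written by one vendor.
    def play(self, frequency_hz: int, duration_ms: int) -> str:
        return f"Acme card: {frequency_hz} Hz for {duration_ms} ms"


class BetaAudioDriver(SoundDriver):
    # Hypothetical driver from a different vendor; its internals could differ
    # completely, but it exposes the same interface.
    def play(self, frequency_hz: int, duration_ms: int) -> str:
        return f"BetaAudio card: {frequency_hz} Hz for {duration_ms} ms"


def beep(driver: SoundDriver) -> str:
    # The application talks only to the interface, so it works with any
    # driver that implements it, including ones written after this code.
    return driver.play(440, 200)


for installed in (AcmeSoundDriver(), BetaAudioDriver()):
    print(beep(installed))
```

Python refuses to instantiate `SoundDriver` itself (it raises `TypeError`) because `play` is abstract, which loosely mirrors the idea that the interface alone doesn't make any sound; a driver has to fill it in.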

The exact mechanism by which driver code runs depends on the OS, the subsystem the driver belongs to, and the design choices made by the people who wrote the driver. Historically, a "driver" has been code that is loaded into the kernel and runs as part of it, rather than as a separate program. Today, it's less clear-cut. Some drivers run entirely outside the kernel, while others have multiple components, some running inside the kernel and some outside it. Microkernels try to move all drivers out of the kernel, although outside of research and some niches (such as embedded systems running QNX), this type of kernel hasn't seen much use. Most drivers in Linux and Windows, though, have at least a component that runs as part of the kernel.

Management

In the bad old days of DOS, you could only run one program at a time. After all, your computer only has one CPU, right? How could more than one program run at once? Well, strictly speaking, a single CPU can't run more than one program at the same time. However, modern operating systems can create the illusion that it does by rapidly switching between programs, letting each one run for a bit before switching to another. (I'm not going to discuss the multi-core chips found in modern computers; they don't change the situation all that much.)

In ye olden days of Windows 3.1, programs were cooperatively multitasked. This means the programmer had to decide where the "good stopping points" were in the code and insert "yield" commands at those points. Whenever a program yielded, control returned to the operating system, which would choose another program to hand control to.
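Cooperative multitasking can be modeled with Python generators: `yield` stands in for the programmer-inserted "yield" command, and a simple loop stands in for the operating system. This is a simplified sketch, not how Windows 3.1 actually implemented it, and `counter` and `run_cooperatively` are hypothetical names.

```python
from collections import deque


def counter(name, steps, log):
    # A well-behaved "program": does a bit of work, then yields control.
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # the programmer-chosen "good stopping point"


def run_cooperatively(programs):
    # The "OS": hands control to each program in turn, round-robin.
    # It only gets control back when a program chooses to yield.
    ready = deque(programs)
    while ready:
        program = ready.popleft()
        try:
            next(program)          # run until the program yields
            ready.append(program)  # it yielded; put it back in line
        except StopIteration:
            pass                   # the program finished; drop it


log = []
run_cooperatively([counter("A", 3, log), counter("B", 2, log)])
print(log)  # ['A:0', 'B:0', 'A:1', 'B:1', 'A:2']
```

Note that the scheduler only regains control when `next()` returns. If a program's loop never reached its `yield` (say, `while True: pass`), `next()` would never return and every other program would freeze, which is exactly the lock-up described next.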

There were a lot of ways this could go very, very wrong. If the programmer didn't choose the yield points well, the program could make other programs run unreasonably slowly. If one program went into an infinite loop, it would never yield control, and the entire system would lock up.

Modern operating systems multitask preemptively. The kernel programs a hardware timer to interrupt the CPU periodically, no matter what the running program is doing; each interrupt runs a piece of kernel code (the scheduler) that checks whether something else should run instead. As a result, a program stuck in an infinite loop can't bring the entire system to a halt, because the OS will ensure that other programs still get their turn.
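Preemption can be sketched by changing who decides when to switch. In this simplified Python model (all names hypothetical), each `yield` marks one simulated CPU instruction rather than a voluntary stopping point, and the scheduler, standing in for the timer interrupt, takes the CPU away after `QUANTUM` instructions regardless of what the program is doing. The `max_instructions` cap exists only so the simulation itself terminates.

```python
from collections import deque

QUANTUM = 3  # instructions per time slice (hypothetical value)


def runaway():
    # A buggy program stuck in an infinite loop. Each yield marks one
    # simulated instruction, not a voluntary "good stopping point".
    while True:
        yield "runaway"


def finite(name, n):
    # A normal program that executes n instructions, then exits.
    for _ in range(n):
        yield name


def run_preemptively(programs, max_instructions):
    # Round-robin with forced switches: after QUANTUM instructions, the
    # current program is sent to the back of the line, like it or not.
    ready = deque(programs)
    trace = []
    while ready and len(trace) < max_instructions:
        program = ready.popleft()
        for executed, instruction in enumerate(program, start=1):
            trace.append(instruction)
            if executed == QUANTUM or len(trace) == max_instructions:
                ready.append(program)  # preempted; it still has work left
                break
        # If the for loop ends without break, the program finished.
    return trace


trace = run_preemptively([runaway(), finite("B", 4)], max_instructions=20)
print(trace.count("B"))  # 4
```

Even though `runaway` never stops on its own, `B` still executes all four of its instructions.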

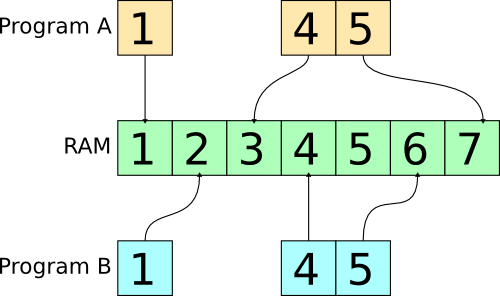

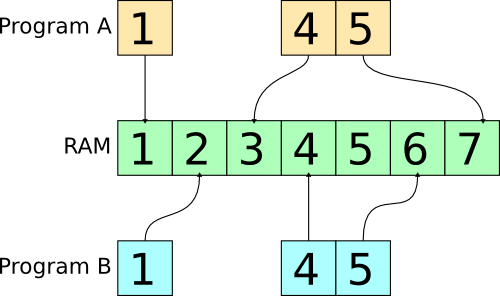

Modern operating systems also provide memory protection. CPUs have long had virtual memory features, which let the operating system give each running program its own "view" of memory. Each program sees itself as having a complete range of memory addresses to itself, and the hardware translates those addresses to physical ones behind the program's back, using mappings the operating system sets up. In the days of Windows 3.1, programs had no such isolation from each other. If Program A was storing an important bit of data at memory address 100 and Program B malfunctioned and wrote garbage over memory address 100, then Program A would crash or behave incorrectly. With virtual memory, Program A's address 100 and Program B's address 100 refer to different physical memory locations, so Program B's malfunction can only harm Program B.

Virtual memory hardware also enables something that, confusingly, is often referred to as "virtual memory" itself: paging, or swapping. This lets the operating system move chunks of memory (called "pages") out to disk (a swap file or, on Linux, often a dedicated swap partition) when available RAM runs low, and bring them back when they're needed. Heavy swapping makes things very slow, since disk is far slower than RAM, but that can be better than crashing due to lack of available memory.
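The address translation described above can be sketched with a per-program page table. This is a simplified, single-level model in Python: real CPUs use multi-level page tables walked in hardware by the memory management unit (MMU), and the 4 KiB page size, frame numbers, and the `Process` and `PageFault` names here are assumptions for illustration.

```python
PAGE_SIZE = 4096  # bytes per page (assumed; 4 KiB is common on x86)


class PageFault(Exception):
    """Raised when a virtual page has no physical mapping."""


class Process:
    def __init__(self, name, page_table):
        self.name = name
        # Maps virtual page number -> physical frame number. The OS builds
        # this; the hardware consults it on every memory access.
        self.page_table = page_table

    def translate(self, virtual_address):
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page not in self.page_table:
            # Real hardware traps to the kernel here, which may bring the
            # page back from swap or terminate the program.
            raise PageFault(f"{self.name}: no mapping for {virtual_address}")
        return self.page_table[page] * PAGE_SIZE + offset


# Both programs use virtual page 0, but the OS mapped it to different frames.
a = Process("Program A", {0: 7})
b = Process("Program B", {0: 12})

print(a.translate(100))  # 28772  (7 * 4096 + 100)
print(b.translate(100))  # 49252  (12 * 4096 + 100)
```

Because the two "address 100"s land in different physical frames, a stray write by Program B can't touch Program A's data.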

Privilege Levels

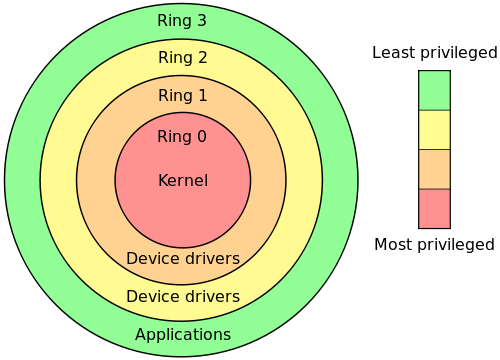

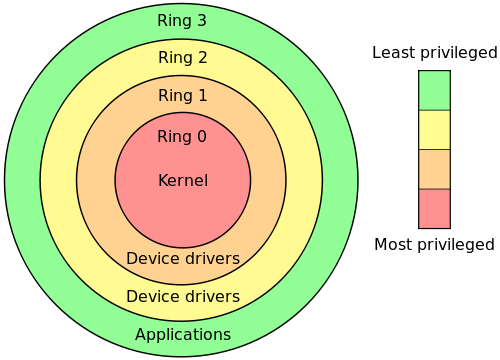

Some CPU instructions are dangerous. They may fiddle with hardware directly or provide raw access to memory. To help developers build secure and robust operating systems, CPUs have privilege levels. A CPU may provide several privilege levels, but typically only two are used, often called "kernel mode" and "user mode". The kernel and any drivers loaded into it run in kernel mode, where anything goes. Everything else runs in user mode, where any attempt to use a dangerous instruction causes the CPU to raise a fault and hand control to the kernel, which typically terminates the offending program. This limits the damage a faulty or malicious program can do, at the cost of a small performance penalty: whenever a user-mode program asks the kernel to do something (a "system call"), the CPU has to switch from user mode into kernel mode and back again.
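Here is a toy model of the two privilege levels, again in Python and with hypothetical instruction names. `execute` refuses privileged instructions in user mode, and `syscall` models a user program asking the kernel for help: the CPU switches to kernel mode, does the work, and switches back, and those two switches are the overhead mentioned above. A real kernel would only perform specific, validated services on a program's behalf, not run arbitrary instructions for it.

```python
class PrivilegeFault(Exception):
    """Raised when user-mode code tries a privileged instruction."""


class ToyCPU:
    # Hypothetical instructions that only kernel mode may execute.
    PRIVILEGED = {"disable_interrupts", "write_io_port", "load_page_table"}

    def __init__(self):
        self.mode = "user"
        self.mode_switches = 0

    def execute(self, instruction):
        if instruction in self.PRIVILEGED and self.mode != "kernel":
            # Real CPUs raise an exception here; the kernel's handler
            # typically terminates the offending program.
            raise PrivilegeFault(f"{instruction!r} not allowed in user mode")
        return f"{self.mode}: {instruction}"

    def syscall(self, instruction):
        # Enter kernel mode, do the work, and always return to user mode.
        self.mode = "kernel"
        self.mode_switches += 1
        try:
            return self.execute(instruction)
        finally:
            self.mode = "user"
            self.mode_switches += 1


cpu = ToyCPU()
print(cpu.execute("add"))            # user: add
print(cpu.syscall("write_io_port"))  # kernel: write_io_port
try:
    cpu.execute("write_io_port")     # same instruction, but in user mode
except PrivilegeFault as err:
    print("fault:", err)
```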


Bootloaders

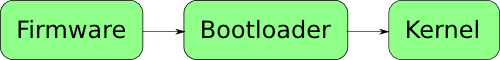

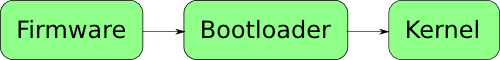

For the kernel to run, it needs to be loaded into memory, and then the CPU needs to be told to start running it. That's the bootloader's job. Linux systems typically use the Grand Unified Bootloader (GRUB). A bootloader is simply a piece of code that can load a kernel into memory and start it; most, GRUB included, also provide a menu for choosing among multiple operating systems, though some of the simpler ones don't. The bootloader also typically loads a chunk of data called the initrd or initramfs, which gives the kernel some things it needs very early in the boot process. For example, if all of your drivers are stored on a disk, the kernel needs the driver for the disk controller before it can read any other drivers from that disk. Thus, the initrd almost always contains disk controller drivers. As for what loads the bootloader and lets it load the initrd and kernel without drivers of its own, well, that's the system's firmware, which is machine-dependent and a very complex topic in its own right (I could write an entire series of articles on firmware and still leave a lot out). Read up on BIOS and UEFI if you're interested.
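The chicken-and-egg problem the initrd solves can be shown with a toy model. Everything here (`boot`, the driver names) is hypothetical and heavily simplified: a real initramfs is an archive containing kernel modules and early-boot scripts, which the kernel unpacks into memory.

```python
def boot(initrd_drivers, disk_drivers):
    """Toy model of early boot; returns the set of drivers the kernel ends up with.

    initrd_drivers: drivers the bootloader placed in RAM alongside the kernel.
    disk_drivers: drivers stored on the root disk.
    """
    loaded = set(initrd_drivers)  # already in memory; no disk access needed
    if "disk_controller" not in loaded:
        # Without a disk controller driver the kernel can't read the disk,
        # so it can't load the driver it would need to read the disk.
        raise RuntimeError("cannot mount root disk: no disk controller driver")
    # Once the disk is readable, everything else can be loaded from it.
    return loaded | set(disk_drivers)


disk = {"sound", "network", "disk_controller"}
print(sorted(boot({"disk_controller"}, disk)))  # ['disk_controller', 'network', 'sound']
```

Calling `boot(set(), disk)` raises `RuntimeError`: the driver the kernel needs is sitting on a disk it can't read.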

Windows has a bootloader, too. For Windows XP, it's NTLDR. For Windows Vista and later, it's the Windows Boot Manager and winload.exe. These pieces of software are somewhat more obscure than GRUB; on a typical computer with Windows on it, Windows is the only OS, so the user never sees NTLDR, WBM, or winload.exe. Linux systems tend to share a machine with Windows ("dual boot"), and GRUB is used to let the user decide which operating system to boot. Furthermore, it is fairly common for machines with just a single Linux installation to show the GRUB menu for a few seconds anyway, although Ubuntu has started hiding the menu on single-OS machines unless the user holds down the Shift key while booting.

That's it for part 1. Next time, I'll talk about the userland.
